SaaS Security

Onboard a Databricks App to SSPM


Connect a Databricks instance to SSPM to detect posture risks.

| Where Can I Use This? | What Do I Need? |
|---|---|
| | Or any of the following licenses that include the Data Security license: |

To run scans of your Databricks instance, SSPM connects to the Databricks instance by using information that you provide. Once SSPM connects, it scans the Databricks instance and will continue to run scans at regular intervals.

You can onboard a Databricks instance by using login credentials or by authenticating to an API through a Databricks-managed service principal that you create. Connecting by using login credentials enables SSPM to complete posture configuration scans for misconfigured settings. Connecting by using a service principal enables SSPM to complete identity scans to detect account risks.

Onboard a Databricks App to SSPM Using Login Credentials

Connect a Databricks instance to SSPM to detect posture risks.

For SSPM to detect posture risks in your Databricks instance, you must onboard your Databricks instance to SSPM. Through the onboarding process, SSPM logs in to Databricks using administrator account credentials. SSPM uses this account to scan your Databricks instance for misconfigured settings. If there are misconfigured settings, SSPM suggests a remediation action based on best practices.

SSPM gets access to your Databricks instance by using Okta SSO or Microsoft Azure credentials that you provide during the onboarding process. For this reason, your organization must be using Okta or Microsoft Azure as an identity provider. The Okta or Microsoft Azure account must be configured for multi-factor authentication (MFA) using one-time passcodes.

To onboard your Databricks instance, you complete the following actions:

- Collect information for accessing your Databricks instance.

  To access your Databricks instance, SSPM requires the following information, which you will specify during the onboarding process.

  | Item | Description |
  |---|---|
  | Username | The username or email address of the account that SSPM will use to access your Databricks instance. The format that you use can depend on whether SSPM will be logging in directly to your account or through an identity provider. Required Permissions: The user must be a Databricks administrator. |
  | Password | The password for the login account. |

  If you're logging in through Okta, you must provide SSPM with the following additional information:

  | Item | Description |
  |---|---|
  | Okta subdomain | The Okta subdomain for your organization. The subdomain was included in the login URL that Okta assigned to your organization. |
  | Okta 2FA secret | A key that is used to generate one-time passcodes for MFA. |

  If you're using Azure Active Directory (AD) as your identity provider, you must provide SSPM with the following additional information:

  | Item | Description |
  |---|---|
  | Azure 2FA secret | A key that is used to generate one-time passcodes for MFA. |

  As you complete the following steps, make note of the values of the items described in the preceding tables. You will need to enter these values during onboarding to access your Databricks instance from SSPM.

  - Identify the account that SSPM will use to access your Databricks instance.

    The user account must have administrator privileges in Databricks. SSPM needs this administrator access to monitor your Databricks instance.
  - Get a secret key for MFA.

    The steps you follow to get the MFA secret key differ depending on the identity provider you're using to access the account.
    - (For Okta log in) To access the account through Okta:
    - (For Microsoft Azure log in) To access the account through Microsoft Azure:

- Connect SSPM to your Databricks instance.

  By adding a Databricks app in SSPM, you enable SSPM to connect to your Databricks instance.
  - Log in to Strata Cloud Manager.
  - Select Configuration > SaaS Security > Posture Security > Applications > Add Application and click the Databricks tile.
  - On the Posture Security tab, Add New instance.
  - Specify how you want SSPM to connect to your Databricks instance. SSPM can Log in with Okta or Log in with Azure.
  - When prompted, provide SSPM with the login credentials and the information it needs for MFA.
  - Connect.
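The 2FA secret collected above is described only as a key that generates one-time passcodes. As a rough illustration of how such a key can produce a passcode, the sketch below assumes the passcodes are time-based (TOTP, RFC 6238) with a 30-second step, 6 digits, and HMAC-SHA1. The source does not confirm these parameters, and the function name `totp` is hypothetical.

```python
import base64
import hashlib
import hmac
import struct
import time


def totp(secret_b32, at_time=None, step=30, digits=6):
    """Compute an RFC 6238 time-based one-time passcode.

    Assumption: the 2FA secret is a Base32 string, as authenticator
    apps commonly display it. This is not confirmed by the source.
    """
    cleaned = secret_b32.replace(" ", "").upper()
    # Base32 decoding needs a length that is a multiple of 8, so pad if required.
    cleaned += "=" * (-len(cleaned) % 8)
    key = base64.b32decode(cleaned)

    # The moving factor is the number of whole time steps since the Unix epoch.
    now = time.time() if at_time is None else at_time
    counter = int(now // step)

    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()

    # Dynamic truncation (RFC 4226): take 31 bits at an offset given by the last nibble.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)
```

Calling `totp("<your Base32 secret>")` returns the passcode for the current 30-second window. Comparing it with the code shown in your authenticator app is one way to check that the secret was copied correctly before you enter it in SSPM.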

Onboard a Databricks App to SSPM Using a Service Principal

Connect a Databricks instance to SSPM to detect posture risks.

For SSPM to detect identity risks in your Databricks instance, you must onboard your Databricks instance to SSPM. Through the onboarding process, SSPM connects to a Databricks API by using a Databricks managed service principal that you create. After connecting to the Databricks API, SSPM runs identity scans of your Databricks instance to detect account risks.

To onboard your Databricks instance, SSPM requires the following information, which you will specify during the onboarding process.

| Item | Description |
|---|---|
| Client ID | SSPM will access a Databricks API through a service principal that you create in Databricks. Databricks generates the Client ID to uniquely identify this service principal. |
| Client Secret | SSPM will access a Databricks API through a service principal that you create in Databricks. Databricks generates the Client Secret, which SSPM uses to authenticate to the API. |
| Account ID | An alphanumeric string that uniquely identifies your Databricks account. |
| Warehouse ID | The unique identifier of the SQL warehouse that SSPM will use to query data from your Databricks instance. |

To onboard your Databricks instance, you complete the following actions:

- Open a web browser to the Databricks Account Console login page and log in as an administrator assigned to both the Account Admin and Workspace Admin roles.

  Required permissions: You must be assigned to the Account Admin role in order to access the Account Console to locate your account ID, and to create a service principal at the account level. To create an SQL warehouse, you must also be assigned to the Workspace Admin role for the workspace where you will create the warehouse.
- Identify your account ID.
  - In the upper-right corner of the console, locate and click your user icon or name.

    The drop-down menu includes your account ID.
  - Copy your account ID and paste it into a text file.

    Don’t continue to the next step unless you have copied the account ID. You will provide this information to SSPM during the onboarding process.
- Create a Databricks managed service principal.

  A Databricks managed service principal is a non-human, programmatic identity that SSPM will use to scan your Databricks instance. When you create a service principal, Databricks generates and displays the programmatic credentials (Client ID and Client Secret) that SSPM will use to access the Databricks API.

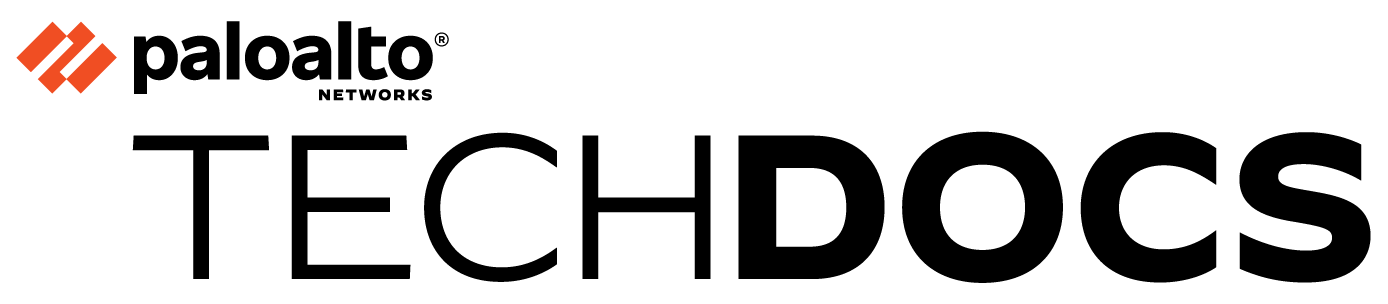

  - Navigate to the User Management page. To do this, from the left navigation pane, select User management.
  - On the User Management page, select the Service principals tab and Add service principal.
  - In the Add Service Principal dialog, specify a name for the service principal.

    This name is the display name for the service principal. It will appear in searches and in the list of service principals on the Service principals tab. For these reasons, specify a meaningful name for the service principal. For example: SSPM Service Principal.

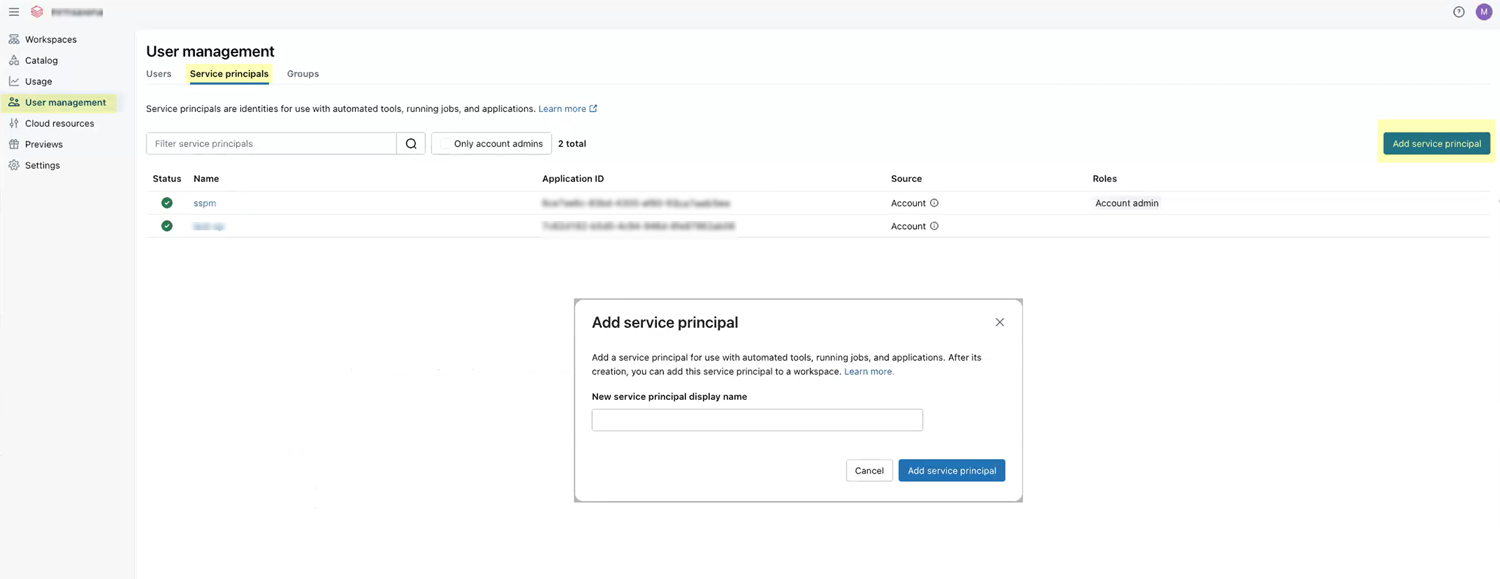

  - To close the Add Service Principal dialog and create the service principal, click Add service principal.

    Databricks displays a configuration page for your new service principal. If Databricks does not display the configuration page for the service principal, you can open it from the list of service principals on the Service principals tab.
  - On the configuration page for your service principal, select the Roles tab and select the Account admin role.
  - On the configuration page for your service principal, select the Credentials & Secrets tab and Generate secret.
  - In the Generate OAuth Secret dialog, specify an expiration period for the secret and Generate the secret.

    Databricks displays the application credentials (Client ID and Client Secret) for your service principal.
  - Copy the Client ID and Client Secret and paste them into a text file.

    Don’t continue to the next step unless you have copied the Client ID and Client Secret. You will provide this information to SSPM during the onboarding process.


- Create an SQL warehouse in Databricks.

  If you already have an SQL warehouse, you don’t need to complete this step. It's not necessary to create a warehouse exclusively for SQL queries from SSPM. If you want to use an existing SQL warehouse, simply provide its warehouse ID to SSPM during onboarding.

  The SQL warehouse you create will provide SSPM with the compute resources needed to run SQL queries on your Databricks instance. Because some Databricks billing is tied to warehouse usage, SSPM scans will add extra costs. See Warehouse Cost Estimates for some rough estimates of these extra costs.
  - Navigate to a workspace where you will create the SQL warehouse.

    If you have multiple workspaces, you do not need to create an SQL warehouse in each of those workspaces. Create the SQL warehouse in any one of your workspaces. Because all workspaces are linked to a central Unity Catalog metastore in Databricks, the warehouse will be able to query data across workspaces.
    - From the left navigation pane, select Workspaces.
    - On the Workspaces page, click the link for the workspace where you will create the warehouse.
    - On the selected workspace's page, click the URL link for the workspace.

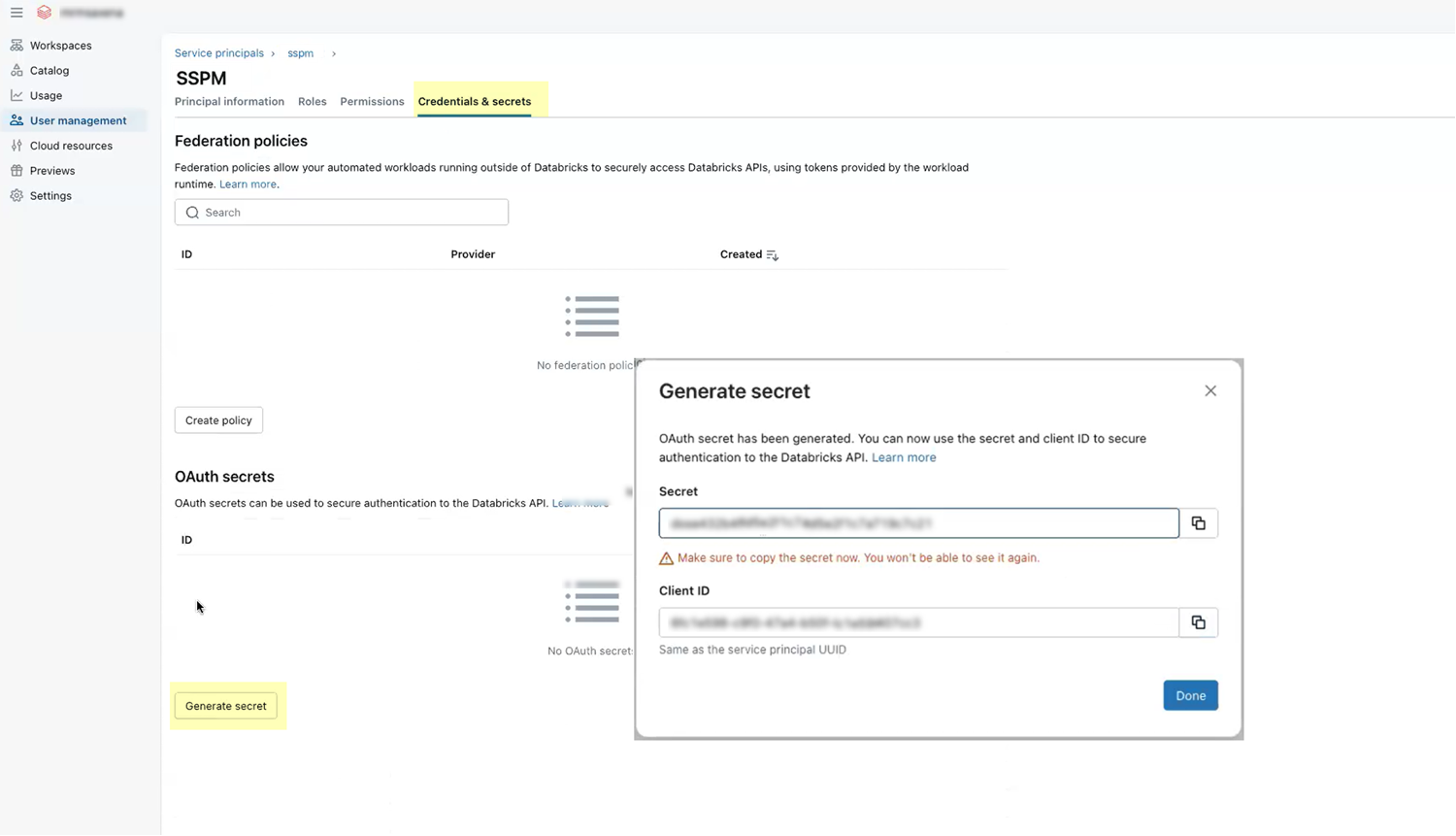

  - From the left navigation pane, select SQL Warehouses.

    Databricks opens the Compute page at the SQL Warehouses tab.
  - On the SQL Warehouses tab, click the Create SQL Warehouse button.
  - In the New SQL Warehouse dialog, complete the following actions:
    - Specify a Name for the warehouse. For example, SSPM Warehouse.
    - Specify a Cluster size. The minimum requirement for running SQL queries from SSPM is 2X-Small.
    - Set the Auto stop time to 5 minutes.
    - Create the SQL warehouse.

      Databricks creates the SQL warehouse and displays an Overview of the SQL warehouse's properties.
    - From the overview page for your new SQL warehouse, copy the warehouse ID and paste it into a text file. The overview page displays the warehouse ID next to the warehouse name that you provided.

      Don’t continue to the next step unless you have copied the warehouse ID. You will provide this information to SSPM during the onboarding process.


  - Grant your service principal permission to execute queries on the warehouse.
    - From the overview page for your SQL warehouse, select Permissions.
    - Use the search field in the Manage Permissions dialog to select the service principal.
    - Set the service principal's permission to Can use.

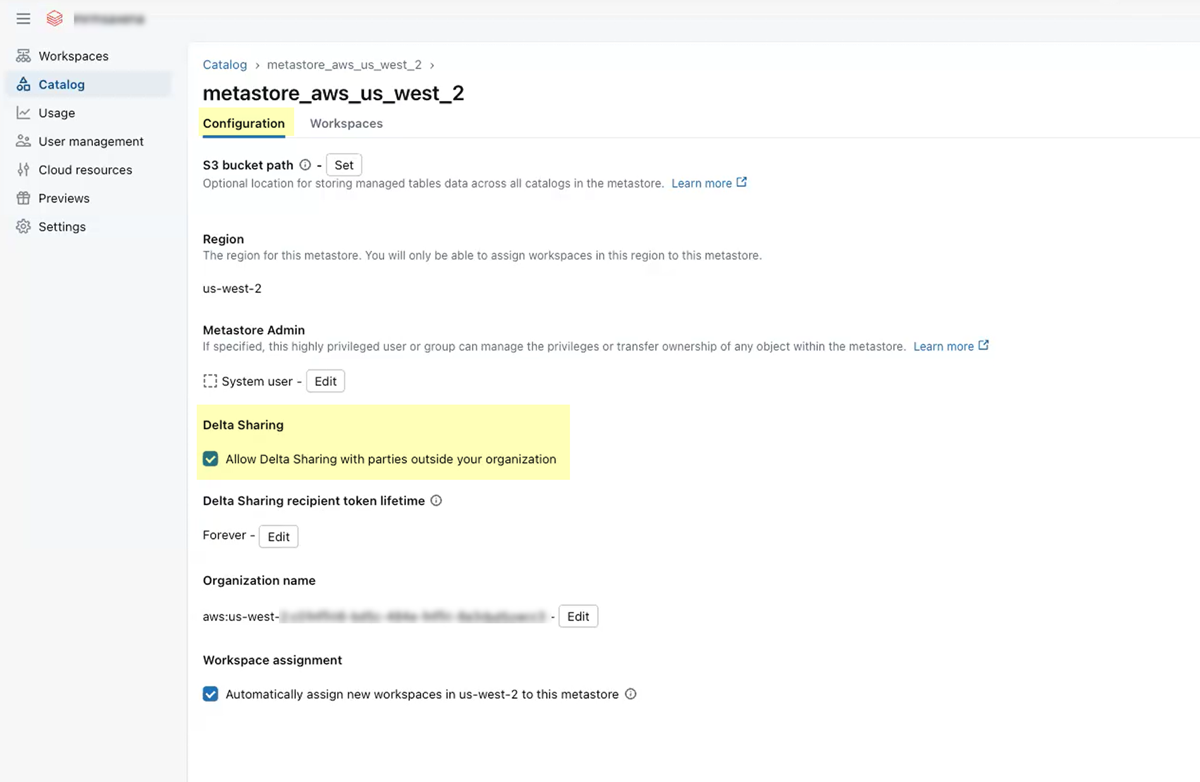

  - From the overview page for your SQL warehouse, Start the warehouse.
- Enable Delta Sharing for your Databricks workspaces.
  - From the left navigation pane, select Workspaces to open the Workspaces page.
  - Repeat the following steps for each of your workspaces:
    - On the Workspaces page, click the link for the workspace's Metastore.
    - On the Configuration tab for the metastore, select the check box to Allow Delta Sharing with parties outside your organization.


- Connect SSPM to your Databricks instance.

  In SSPM, complete the following steps to enable SSPM to connect to your Databricks instance.
  - Log in to Strata Cloud Manager.
  - Select Configuration > SaaS Security > Posture Security > Applications > Add Application and click the Databricks tile.
  - On the Posture Security tab, Add New instance.
  - Log in with Credentials.
  - Enter the programmatic credentials (Client ID and Client Secret), your account ID, and the warehouse ID.
  - Connect.
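Before entering the Client ID, Client Secret, and account ID in SSPM, you may want to confirm that they work together. The sketch below builds, but does not send, an OAuth client-credentials token request of the kind Databricks documents for service principals. It is not a description of how SSPM itself connects. The default host `accounts.cloud.databricks.com` is an assumption that applies to AWS-hosted accounts, so other clouds need a different accounts host. The function name `build_token_request` is hypothetical.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(account_id, client_id, client_secret,
                        accounts_host="accounts.cloud.databricks.com"):
    """Build an account-level OAuth token request without sending it.

    Assumption: accounts_host defaults to the AWS accounts console host;
    pass the host for your own cloud if it differs.
    """
    url = f"https://{accounts_host}/oidc/accounts/{account_id}/v1/token"

    # Form-encoded body for the client-credentials grant.
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": "all-apis"}
    ).encode("ascii")

    # The Client ID and Client Secret travel as HTTP Basic credentials.
    basic = base64.b64encode(
        f"{client_id}:{client_secret}".encode("utf-8")
    ).decode("ascii")

    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )
```

Passing the returned request to `urllib.request.urlopen` sends it. If the credentials and account ID are valid, the response should be a JSON document that includes an access token. An HTTP 401 error usually means the Client ID or Client Secret was copied incorrectly or the secret has expired.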

Warehouse Cost Estimates

SSPM scans your Databricks instance by issuing SQL queries, which are processed through your SQL warehouse. Based on the compute resources used to satisfy your SQL queries, your account will be billed for additional Databricks Units (DBUs). The following table provides a rough estimate of the additional costs that SSPM scans will incur based on the number and duration of scans. These are rough estimates only and your actual usage and costs will vary.

| Scenario | Query Runtime | Idle Buffer | On-Time Per Scan | DBU Per Scan | Monthly DBU (4 Scans Per Day) | $ @ $0.70/DBU* |
|---|---|---|---|---|---|---|
| Worst-case | 120 s (Assuming 100k logs) | 300 s | 420 s | 4 DBU/h × 420/3600 = 0.47 | 0.47 × 4 × 30 DBU ≈ 57 DBU | ≈ $40 / month |
| Best-case | 10 s | 300 s | 310 s | 4 DBU/h × 310/3600 = 0.34 | 0.34 × 4 × 30 ≈ 41 DBU | ≈ $28.7 / month |
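To adapt the estimate to your own scan frequency or DBU price, the arithmetic in the table can be sketched as a small function. The defaults (300 s idle buffer, 4 DBU/h, 4 scans per day, 30 days per month, $0.70 per DBU) are taken from the table. The function name `scan_cost` is hypothetical, and your actual warehouse rate and pricing may differ.

```python
def scan_cost(query_runtime_s, idle_buffer_s=300, dbu_per_hour=4.0,
              scans_per_day=4, days_per_month=30, usd_per_dbu=0.70):
    """Estimate the monthly DBU usage and cost of SSPM scans.

    All defaults mirror the figures in the table above; they are
    rough planning numbers, not measured values.
    """
    # The warehouse stays on for the query plus the auto-stop idle buffer.
    on_time_s = query_runtime_s + idle_buffer_s

    # Convert on-time to hours and multiply by the hourly DBU rate.
    dbu_per_scan = dbu_per_hour * on_time_s / 3600

    monthly_dbu = dbu_per_scan * scans_per_day * days_per_month
    return {
        "on_time_s": on_time_s,
        "dbu_per_scan": dbu_per_scan,
        "monthly_dbu": monthly_dbu,
        "monthly_usd": monthly_dbu * usd_per_dbu,
    }
```

For example, `scan_cost(120)` reproduces the worst-case row and `scan_cost(10)` the best-case row. Because the function does not round intermediate values, its results differ slightly from the table, which rounds DBU per scan to two decimal places before multiplying.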