Deploy the GCP Active/Passive HA

End-of-Life (EoL)


Use the following procedures to prepare, deploy, test, and delete an active/passive HA deployment of VM-Series firewalls on GCP.

Preparing to Set Up Active/Passive HA in GCP

- Enable the required APIs, generate an SSH key, and clone the GitHub repository using:

      gcloud services enable compute.googleapis.com
      ssh-keygen -f ~/.ssh/vmseries-tutorial -t rsa
      git clone https://github.com/PaloAltoNetworks/google-cloud-vmseries-ha-tutorial
      cd google-cloud-vmseries-ha-tutorial

- Create a terraform.tfvars file.

      cp terraform.tfvars.example terraform.tfvars

- Edit the new terraform.tfvars file and set values for the following variables:

| Variable | Description |
| --- | --- |
| project_id | Set to your Google Cloud deployment project. |
| public_key_path | Set to the full path of the SSH public key you created previously. |
| mgmt_allow_ips | Set to a list of IPv4 ranges that can access the VM-Series management interface. |
| prefix | (Optional) If set, this string is prepended to the names of the created resources. |
| vmseries_image_name | (Optional) Defines the VM-Series image to deploy. A full list of images can be found here. |

- (Optional) If you are using a BYOL image (i.e., vmseries-flex-byol-*), you can apply the license during deployment by adding your VM-Series authcode to bootstrap_files/authcodes.

- Save your terraform.tfvars file.

Deploying the GCP Active/Passive HA
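As a reference before running Terraform, the variables described above could be filled in as in the following sketch. Every value is a placeholder assumption rather than something taken from the tutorial: the project ID, the prefix, and the 203.0.113.0/24 range (a block reserved for documentation) must be replaced with your own values. The `.pub` suffix assumes the default naming that `ssh-keygen` uses for the public half of the key. Note that the command overwrites any existing terraform.tfvars in the current directory.

```shell
# Write a sample terraform.tfvars. All values are placeholders; adjust before use.
# The heredoc delimiter is unquoted so that ${HOME} expands to a full path.
cat > terraform.tfvars <<EOF
project_id      = "my-gcp-project"                      # placeholder project ID
public_key_path = "${HOME}/.ssh/vmseries-tutorial.pub"  # public key written by ssh-keygen
mgmt_allow_ips  = ["203.0.113.0/24"]                    # placeholder management source range
prefix          = "demo-"                               # optional, placeholder
EOF

# Print the file for review before running Terraform.
cat terraform.tfvars
```

The optional vmseries_image_name variable is left out on purpose so that the default from the repository applies.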

- Initialize and apply the Terraform plan.

      terraform init
      terraform apply

- Enter yes to start the deployment. After all the resources are created, Terraform displays the following message:

      Apply complete!

      Outputs:

      EXTERNAL_LB_IP = "ssh paloalto@1.1.1.1 -i ~/.ssh/vmseries-tutorial"
      EXTERNAL_LB_URL = "https://1.1.1.1"
      VMSERIES_ACTIVE = "https://2.2.2.2"
      VMSERIES_PASSIVE = "https://3.3.3.3"

All the infrastructure is now deployed; it boots and configures itself without further input. A few minutes after the deployment completes, browse to the external_nat_ip address (http://x.x.x.x) to see the default web page served by the workload-vm.
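Because the firewalls and the workload VM need a few minutes to boot, it can be convenient to poll the web page rather than refresh it by hand. The following is a minimal sketch of a generic retry helper; the function name `retry` and the attempt and delay values are illustrative assumptions, not part of the tutorial.

```shell
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS runs have failed.
# Returns 0 on the first success, 1 if every attempt fails.
retry() {
  retry_attempts="$1"
  retry_delay="$2"
  shift 2
  retry_i=1
  while [ "$retry_i" -le "$retry_attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Sleep between attempts, but not after the last one.
    if [ "$retry_i" -lt "$retry_attempts" ]; then
      sleep "$retry_delay"
    fi
    retry_i=$((retry_i + 1))
  done
  return 1
}

# Example (not executed here): wait up to about 5 minutes for the web page.
# -k skips certificate verification, which is only reasonable for a lab setup.
#   retry 30 10 curl -k -s -o /dev/null --max-time 5 \
#     "$(terraform output -raw EXTERNAL_LB_URL)"
```

The helper takes the probe as an arbitrary command, so the same function can wrap an SSH check or any other readiness test.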

Testing the GCP Active/Passive HA deployment

You can now test the deployment by accessing the workload-vm that resides in the trust VPC network. All of the workload-vm traffic is routed directly through the VM-Series HA pair.

- Use the output EXTERNAL_LB_URL to access the web service on the workload-vm through the VM-Series firewall.

      gcloud compute ssh workload-vm

- Use the output EXTERNAL_LB_SSH to open an SSH session through the VM-Series to the workload-vm.

      ssh paloalto@1.1.1.1 -i ~/.ssh/vmseries-tutorial

- Run a preloaded script on the workload VM to test the failover mechanism across the VM-Series firewalls.

      /network-check.sh

  The output is similar to the following, where x.x.x.x is the EXTERNAL_LB_IP address.

      Wed Mar 12 16:40:18 UTC 2023 -- Online -- Source IP = x.x.x.x
      Wed Mar 12 16:40:19 UTC 2023 -- Online -- Source IP = x.x.x.x
      Wed Mar 12 16:40:20 UTC 2023 -- Online -- Source IP = x.x.x.x
      Wed Mar 12 16:40:21 UTC 2023 -- Online -- Source IP = x.x.x.x

- Log in to the VM-Series firewalls using the VMSERIES_ACTIVE and VMSERIES_PASSIVE output values. Notice the HA status of the firewalls in the bottom-right corner of the management window.

- Perform a user-initiated failover.

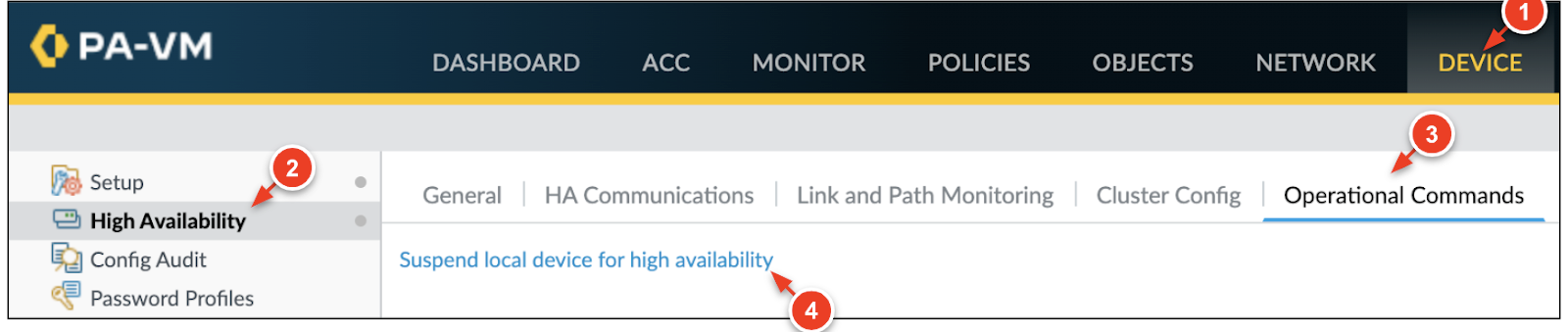

- On the active firewall, select Device > High Availability > Operational Commands.

- Click Suspend local device for high availability.


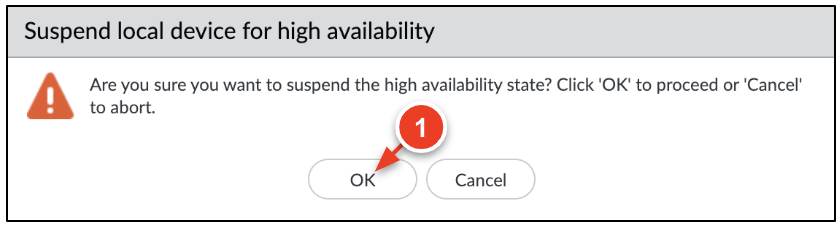

- When prompted, click OK to initiate the failover.

- Notice that the SSH session to the workload-vm is still active. This indicates that the session successfully failed over between the VM-Series firewalls. After a brief interruption, the script output should also display the same source IP address.

      Wed Mar 12 16:47:18 UTC 2023 -- Online -- Source IP = x.x.x.x
      Wed Mar 12 16:47:19 UTC 2023 -- Online -- Source IP = x.x.x.x
      Wed Mar 12 16:47:21 UTC 2023 -- Offline
      Wed Mar 12 16:47:22 UTC 2023 -- Offline
      Wed Mar 12 16:47:23 UTC 2023 -- Online -- Source IP = x.x.x.x
      Wed Mar 12 16:47:24 UTC 2023 -- Online -- Source IP = x.x.x.x
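To put a number on the interruption seen during failover, the script output can be tallied. The sketch below assumes the output was saved to a file and follows the line format shown above; the function name `summarize_outage` and the file name `check.log` are hypothetical.

```shell
# summarize_outage: reads network-check.sh style lines on stdin and prints
# how many samples were reported as Online and how many as Offline.
summarize_outage() {
  awk '
    / -- Offline/ { offline++ }
    / -- Online/  { online++ }
    END { printf "online=%d offline=%d\n", online, offline }
  '
}

# Example (not executed here), assuming the output was captured with
#   /network-check.sh | tee check.log
#   summarize_outage < check.log
```

Applied to the six sample lines above, the function prints `online=4 offline=2`. If the script emits roughly one line per second, as the timestamps suggest, the Offline count approximates the outage in seconds.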

Onboarding Internet Applications

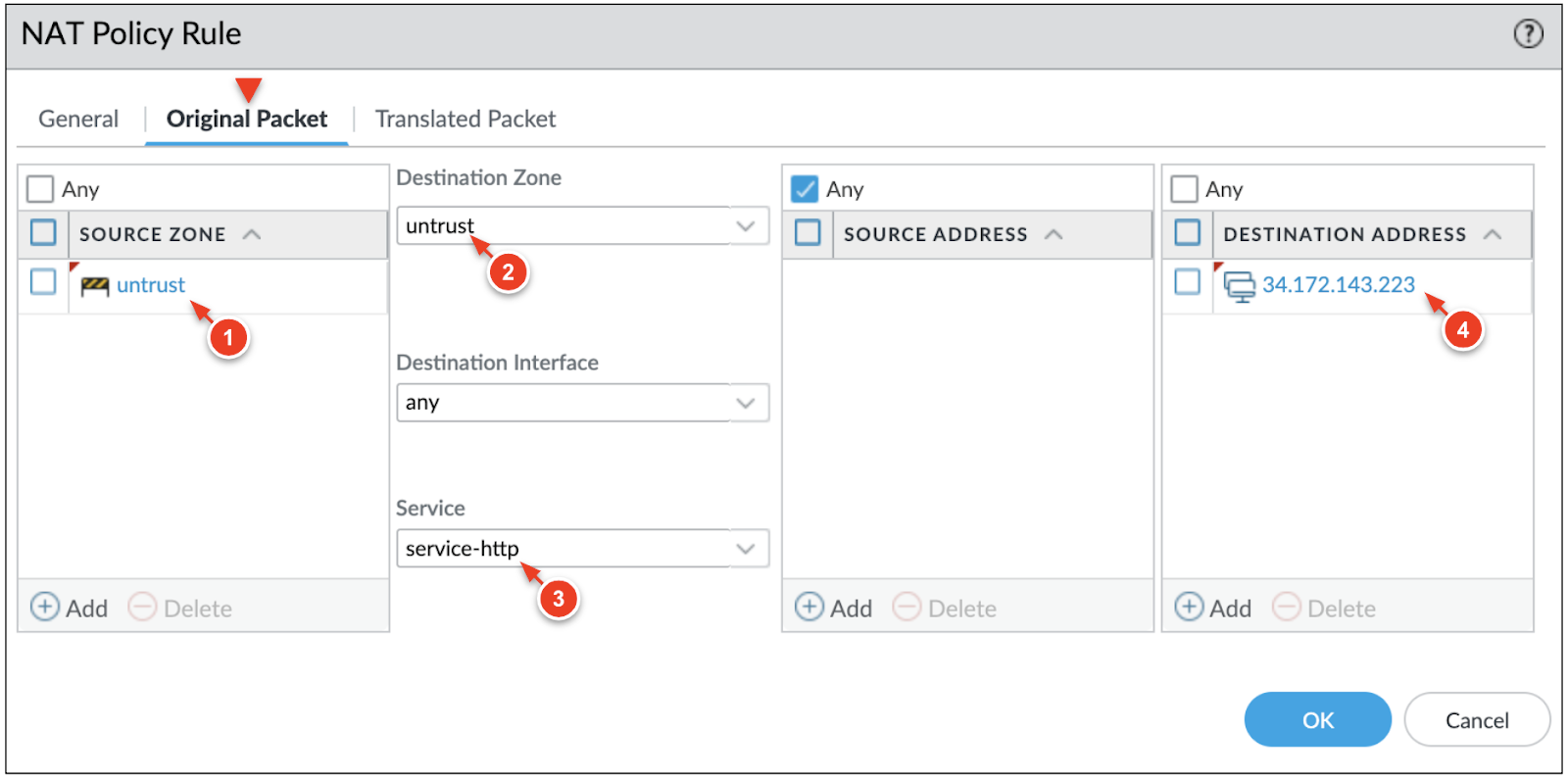

You can onboard and secure multiple internet-facing applications through the VM-Series firewall. This is done by mapping forwarding rules on the external load balancer to NAT policies defined on the VM-Series firewall.

- In Cloud Shell, deploy a virtual machine into a subnet within the trust VPC network. The virtual machine in this example runs a sample application for you.

      gcloud compute instances create my-app2 \
          --network-interface subnet="panw-us-central1-trust",no-address \
          --zone=us-central1-a \
          --image-project=panw-gcp-team-testing \
          --image=ubuntu-2004-lts-apache-ac \
          --machine-type=f1-micro

- Record the INTERNAL_IP address of the new virtual machine.

      NAME: my-app2
      ZONE: us-central1-a
      MACHINE_TYPE: f1-micro
      PREEMPTIBLE:
      INTERNAL_IP: 10.0.2.4
      EXTERNAL_IP:
      STATUS: RUNNING

- Create a new forwarding rule on the external TCP load balancer.

      gcloud compute forwarding-rules create panw-vmseries-extlb-rule2 \
          --load-balancing-scheme=EXTERNAL \
          --region=us-central1 \
          --ip-protocol=L3_DEFAULT \
          --ports=ALL \
          --backend-service=panw-vmseries-extlb

- Retrieve and record the address of the new forwarding rule.

      gcloud compute forwarding-rules describe panw-vmseries-extlb-rule2 \
          --region=us-central1 \
          --format='get(IPAddress)'

  (output)

      34.172.143.223

- On the active VM-Series, click Policies > NAT > Add and enter a name for the rule.

- Configure the Original Packet as follows:

- Source Zone: untrust

- Destination Zone: untrust

- Service: service-http

- Destination Address: Set to the forwarding rule's IP address (for example, 34.172.143.223).

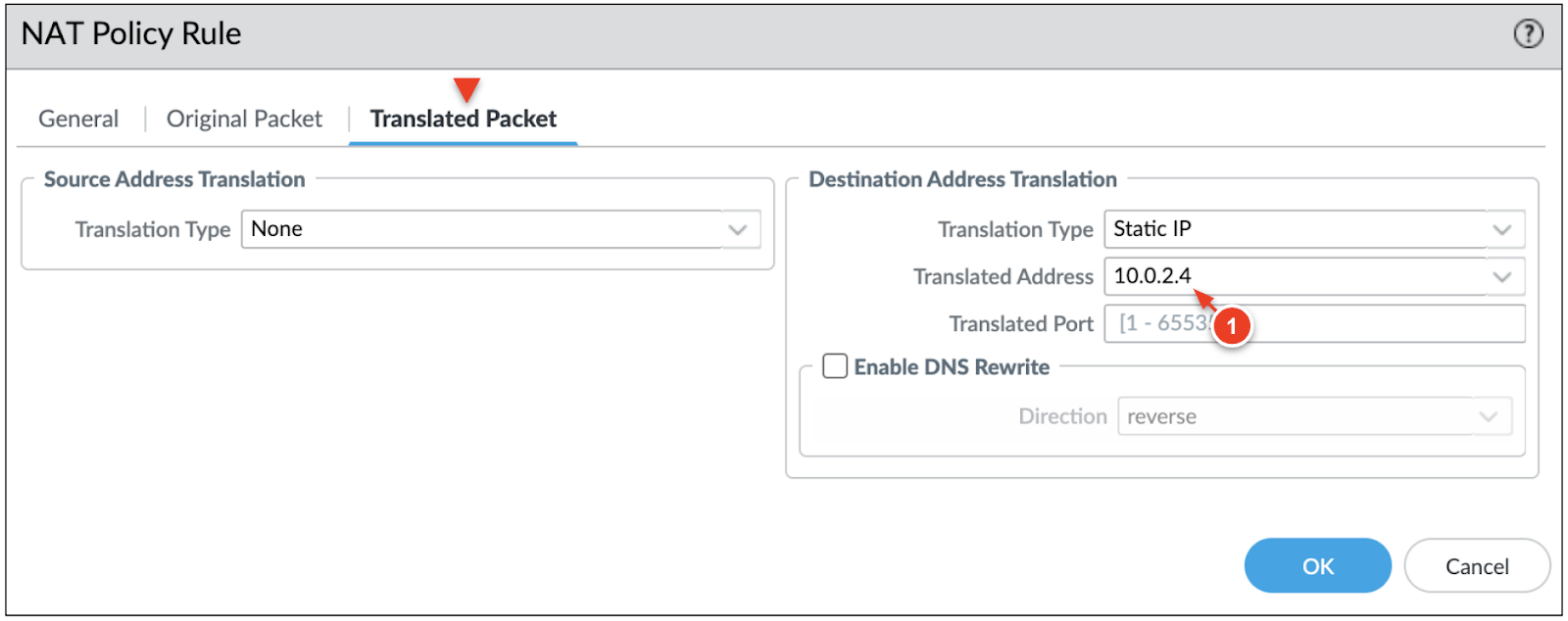


In the Translated Packet tab, configure the Destination Address Translation as follows:

- Translated Type: Static IP

- Translated Address: Set to the INTERNAL_IP of the sample application (for example, 10.0.2.4).

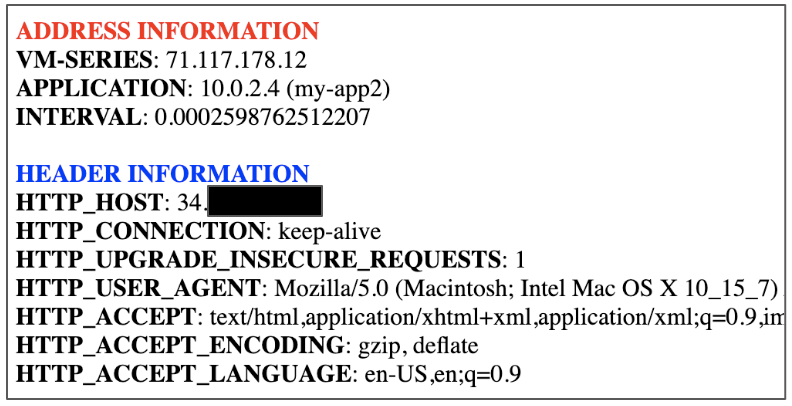


- Click OK and commit the changes.

- Access the sample application using the forwarding rule's address.

      http://34.172.143.223/
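The gcloud steps in this section can be collected into a single script for review before anything is created. The sketch below wraps each command in a hypothetical `run` helper that only prints the command unless `DRY_RUN=0` is set; the helper and the `REGION`, `ZONE`, and `RULE` variables are illustrative assumptions, while the resource names and flags are the ones used in the steps above. The NAT policy still has to be configured on the firewall separately.

```shell
# DRY_RUN defaults to 1: commands are printed with a "+ " prefix, not executed.
DRY_RUN="${DRY_RUN:-1}"

# run COMMAND [ARGS...]: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

REGION="us-central1"
ZONE="us-central1-a"
RULE="panw-vmseries-extlb-rule2"

# Sample application VM in the trust subnet, without an external address.
run gcloud compute instances create my-app2 \
  --network-interface subnet="panw-us-central1-trust",no-address \
  --zone="$ZONE" \
  --image-project=panw-gcp-team-testing \
  --image=ubuntu-2004-lts-apache-ac \
  --machine-type=f1-micro

# Additional forwarding rule on the existing external load balancer backend.
run gcloud compute forwarding-rules create "$RULE" \
  --load-balancing-scheme=EXTERNAL \
  --region="$REGION" \
  --ip-protocol=L3_DEFAULT \
  --ports=ALL \
  --backend-service=panw-vmseries-extlb

# Address to use as the Destination Address in the NAT rule.
run gcloud compute forwarding-rules describe "$RULE" \
  --region="$REGION" \
  --format='get(IPAddress)'
```

Running the script as is lists the three commands. Running it with `DRY_RUN=0` in the environment executes them against the currently configured project.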

Deleting the Resources

You can delete all the resources when you no longer need them.

- (Optional) If you onboarded an additional application, delete the forwarding rule and the sample application machine.

      gcloud compute forwarding-rules delete panw-vmseries-extlb-rule2 \
          --region=us-central1
      gcloud compute instances delete my-app2 \
          --zone=us-central1-a

- Destroy the resources that Terraform created using the command:

      terraform destroy

- At the prompt to perform the actions, enter yes. After all the resources are deleted, Terraform displays the following message:

      Destroy complete!